YottaDB r2.02 includes a number of features and enhancements that make YottaDB easier to use, and more like other Linux programs. For example:

- With `ydbsh`, you can create shebang style scripts with M code.

- SOCKET devices support TLS connections using server certificates that do not require a password, such as those issued by Let’s Encrypt.

- Several optimizations to speed up Boolean operations.

In addition to enhancements and fixes made by YottaDB, r2.02 completes the merging of the GT.M V7.0 versions (V7.0-002, V7.0-003, V7.0-004, and V7.0-005) into the YottaDB code base, as described in the Release Notes.

Comparing YottaDB and Redis Using 3n+1 Sequences

TL;DR: Performance Comparisons has instructions for you to build a Docker container that allows you to make a side-by-side comparison of RedisⓇ,[1] Xider™,[2] and YottaDBⓇ. The image above is a screenshot of Xider and YottaDB outperforming Redis with a 32-process workload.

In 2025, the Journal of Computing Languages (JCL) plans a special issue recognizing 30 years of Lua. Since YottaDB has a native Lua API, we have submitted an article to JCL for that issue. A preprint of that article is on arXiv. The article compares the Redis and YottaDB APIs, and delves deeper into the performance comparison described below.

The choice of workload is important when benchmarking databases.

- A realistic benchmark must perform a large number of accesses, in order to remove timing jitter.

- Accesses must be of different types, as real-world workloads are not monolithic in their database accesses.

- The benchmark must run on a variety of computing hardware.

- The benchmark must give consistent results on repeated runs.

- The workload should be simple and understandable.

Computing the lengths of 3n+1 sequences meets these requirements. Given an integer n, the next number in the sequence is (a) n÷2 if n is even, and (b) 3n+1 if n is odd. The Collatz Conjecture, one of the great unproven results of number theory, asserts that all such sequences end in 1, i.e., there are no loops, and no sequences of infinite length. For example:

- 3 → 10 → 5 → 16 → 8 → 4 → 2 → 1

- 13 → 40 → 20 → 10 → 5 → 16 → 8 → 4 → 2 → 1

Note that the sequences starting with 3 and 13 both meet at 10. If there are two processes, one computing the lengths of 3n+1 sequences starting with 13, and the other those of sequences starting with 3, and if they use a database to store the results as they work, then the process which reaches 10 later can simply use the results of the earlier process which has already computed and stored the results for the rest of the sequence.
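The reuse of stored results described above can be sketched in a few lines of Python. This is only an illustration, not one of the benchmark programs: the dictionary `cache` is a hypothetical stand-in for the shared database, and `collatz_length` is a name made up for this example.

```python
def collatz_length(n, cache):
    """Return the number of steps for n to reach 1, reusing stored results."""
    path = []
    # Walk the sequence until we reach 1 or a number whose length is known.
    while n != 1 and n not in cache:
        path.append(n)
        n = n // 2 if n % 2 == 0 else 3 * n + 1
    steps = cache.get(n, 0)  # 0 if the walk stopped at 1 itself
    # Store the length for every number visited, nearest to the end first.
    for m in reversed(path):
        steps += 1
        cache[m] = steps
    return steps


cache = {}  # hypothetical stand-in for a database shared by processes
print(collatz_length(3, cache))   # walks the full sequence: 7 steps
print(collatz_length(13, cache))  # stops at 10, already stored: 9 steps
```

The second call walks only 13 → 40 → 20 and then picks up the stored result for 10, which is the effect the benchmark relies on when many processes share one database.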

The picture[3] is a visualization of the 3n+1 sequences of the numbers through 20,000, illustrating the convergence of the sequences, with all eventually converging on 1.

The problem is simple enough for programs to be easily written in virtually any language. While our intent in our blog post Solving the 3n+1 Problem with YottaDB was to compare programming languages, the programs can also be used to compare databases, and database APIs. In particular, we can compare Redis, YottaDB, and Xider. Xider is an API layer that allows YottaDB to provide applications with a Redis-compatible API, with two options:

- over TCP using the Redis Serialization Protocol (RESP); and

- for databases residing on the same machine as the client, we offer a direct connection to the database engine using Python and Lua APIs. The API is the same as the TCP / RESP API, except that it calls the database engine using our in-process native API calls.

In addition to the above two comparisons, which use the same program for Redis and Xider, there are also Lua, Python, and M programs that directly access the database, i.e., without going through a Redis compatible API layer.

We invite you to compare Redis, Xider, and YottaDB for yourself, and send us your comments.

[1] Redis is a registered trademark of Redis Ltd. Any rights therein are reserved to Redis Ltd. Any use by YottaDB is for referential purposes only and does not indicate any sponsorship, endorsement or affiliation between Redis and YottaDB.

[2] As Xider is still under development, not all Redis functionality is available. Any implemented functionality is upward compatible with Redis. Since Xider is continuously released, if functionality your application needs is not available, please check whether there is an Issue for it, and create one if there isn’t one. You can create a GitLab notification to stay informed about Xider updates.

[3] Picture generated using The Collatz Conjecture and Edmund Harriss’s visualisation tool.

We thank Kurt Le Breton for his first blog post, and hope there are many more to follow. If you would like to post on the YottaDB blog please contact us at info@yottadb.com.

A New Hope: The Emergence of MUMPS and YottaDB

In a computer universe not so far far away…

It is a time of great innovation.

Revolutionary technology is emerging from the depths of computing history, where storage space and code efficiency were once the ultimate challenges.

In the midst of this revolution, a succinct though ancient language, born from the constraints of early computing, stands as a beacon of simplicity and robustness.

MUMPS emerges from its historic confines on a unique mission: to engage in an epic battle against a squadron of transactions, overcoming all conflicts with unparalleled grace.

As millions of processes vie for victory in a grand auction of galactic proportions, the force of YottaDB’s internal transaction management triumphs, showing how old design constraints can lead to new levels of resilience.

A Chatbot, a Guest Writer, and a Coding Journey

Dramatic? Maybe a little over the top. Corny? Yeah, of course, but what self-respecting sort of nerd would I be without some sci-fi references! I do hope to have piqued your curiosity though, and yes, there will be an epic battle of transactions before we conclude, so stay tuned.

Truth be told, I’m genuinely surprised to be writing this today. I didn’t expect a spur-of-the-moment email to the YottaDB team would result in a request from Bhaskar to be a guest writer. Seriously, I’m a coder, not a wordsmith, so I apologize for getting ChatGPT to help out with the introduction (though I must admit, I’m partly responsible for the corniness).

ChatGPT! Yes, this whole episode started with AI – it got me into this mess in the first place. I promise, though, this is not a story about AI but rather … well … let me rewind.

Back in the late 90’s, I landed my first programming job with a medical technology company in Australia, writing hospital information systems; shortly afterwards, I was with another firm writing laboratory ones. You can probably guess by now that at the core of both these products was MUMPS. That was my first introduction to the technology.

Now, I do know that the ANSI Standard refers to the language as M, however since I come from the world of medical software, I’m allowed a little case of the MUMPS now and then. So in the spirit of Bhaskar’s presentations, I’ll be keeping those mustard stains on my tie. But I digress…

Since I was fresh out of college, super green, and a little naive, I asked our database engineer why the company didn’t use XYZ Brand relational database. The short answer: it just wouldn’t cut it. At the time, I felt this wasn’t much of an explanation, but I did become curious.

I’m not quite sure what sparked my interest, but the hierarchical structure really appealed to me. Even today, I can’t fully explain why, but I’m still captivated by MUMPS. Bitten by the bug so to speak … I know, bad joke … perhaps I will change that tie after all! Thinking on it now, I would describe M as unique: it’s a little rough around the edges and yet, I think, remains fascinating and compelling even today.

So where is this all leading, you ask? Specifically to an implementation detail in GT.M, and by extension YottaDB, that truly stands out. It’s a hidden gem, hinted at in M’s language semantics, but only fully realized through GT.M’s engineers. I’ll delve into details a bit later.

But first let’s jump back to ChatGPT and the very start of our story.

The Hidden Gem: YottaDB’s Conflict Resolution

I enjoy coding! I really do. Like most programmers, I have my fair share of “pet” projects to work on. As a long-time M geek, it’s no surprise that at least one of those is M-based. And with YottaDB being open-sourced, it was the natural choice, since hobbies rarely generate income.

But, as any developer with niche interests knows, it can get a bit lonely in the wee hours of the morning when there’s no one around to bounce ideas off. So, of course I ended up talking to a chatbot!

It was during one of these moments with ChatGPT, while weighing the pros and cons of a decision I was stuck on, that the conversation delved into the internals of YottaDB’s transaction handling, particularly its automatic conflict resolution and retry mechanism.

And herein lies the hidden gem I wish to explore with you all.

A Reality of Relational Databases

But before we dive in, please let me take another quick step back in time. I didn’t spend my entire career with M-based companies. Eventually, I moved on and found myself working in the more ubiquitous world of relational databases. As capable as these systems are, I always felt something was missing. For certain data needs, I knew a more elegant way to model it would be hierarchically. However, I was stuck normalizing everything into a trillion tables.

I really missed M, but now I was working in the relational world and had to code within the constraints of that architecture. Speaking of those constraints, let me state an obvious, but crucial, point: relational databases are self-contained products. Your data is separate from your application logic. Hence the database has no understanding of how other software uses that data; it simply responds to the requests it’s given.

It simply responds, until it can’t!

Write issues are generally rare for any system under light load. But, just occasionally, one process might commit its transaction before another can, resulting in a concurrency violation. The database doesn’t have enough context to manage this for you and so its responsibility rightly ends in maintaining data integrity.

All databases handle failed requests through various mechanisms, but ultimately, it’s your code that will face the brunt of those errors. The hot-potato is always thrown back to the application developer. It’s a significant amount of error handling to manage in addition to your primary code. The main point is, issues intensify under heavier and heavier load.

In the relational world, this is just an accepted reality.

YottaDB’s Approach: Seamless Conflict Handling

But with YottaDB, a different reality awaits you.

At the heart of a YottaDB based system is the integration of code execution and data access from within the same process. This tight coupling, required by the M language, lays the foundation for YottaDB’s robust resolution mechanism.

Building on this, YottaDB also employs an optimistic concurrency strategy, which operates on the assumption of success and is highly performant most of the time. When inevitable conflicts occur, and multiple processes attempt to update the same piece of data, YottaDB steps in like a police officer directing traffic. It allows one process to proceed, then the next, until everything is resolved.

How? Well, you see, YottaDB has enough context to automatically manage this for you. Which approach it takes depends on whether you’re using the C-API with modern languages, or writing traditional M code. For now let’s keep a chronological flow and first focus on M to explore how the language specifies transactional semantics.

How M Specifies Transactions

A transaction begins with the command:

tstart

Your logic can then modify both variables and database entries. When it’s ready to finalize the transaction, it issues:

tcommit

Now here’s when YottaDB’s hidden gem becomes apparent: when you use a modified tstart and activate the automatic retry mechanism. On write conflicts, YottaDB presses its hidden rewind button: not only are database changes undone, but your application logic also rolls back to the tstart checkpoint. Depending on the transaction variant you use, some, none, or all local variables can be reverted to their checkpoint-time values. Once that’s done, your code runs again. Often, a retry will succeed with a tcommit, but in cases of persistent conflict, YottaDB dons its police officer cap to decide the order of resolution.

Please pause to ponder how cool this is.

Can you think of a strategy to retro-fit this ability into traditional databases? Neither can I.

Now, briefly, here are the variations that control which local variables, if any, are reset along with database changes. The upcoming code sample will showcase two of these.

- tstart * – All local variables on the stack will be reset on every retry.

- tstart () – No local variables will be reset at all.

- tstart (a,b,c) – Only variables a, b, and c will be reset on each retry.
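For readers who do not know M, here is a rough Python sketch of the idea, covering only the case where every local variable is rewound on retry. It is not YottaDB's implementation or API: `Store`, `Conflict`, `run_transaction`, `place_bid` and the `max_tries` limit are all hypothetical names and values made up for this illustration, and the competing bidder is simulated inside the transaction body.

```python
import copy


class Conflict(Exception):
    """Raised when another writer committed since we started."""


class Store:
    """Hypothetical in-memory store with a version counter (not YottaDB)."""

    def __init__(self):
        self.data = {}
        self.version = 0

    def commit(self, seen_version, updates):
        # Optimistic check: fail if anyone committed after we began.
        if seen_version != self.version:
            raise Conflict()
        self.data.update(updates)
        self.version += 1


def run_transaction(store, body, local_vars, max_tries=4):
    """Run body until it commits, rewinding local_vars before each retry."""
    checkpoint = copy.deepcopy(local_vars)  # plays the role of the tstart checkpoint
    for attempt in range(max_tries):
        seen_version = store.version
        updates = {}  # writes are buffered until commit
        body(store, updates, local_vars, attempt)
        try:
            store.commit(seen_version, updates)  # plays the role of tcommit
            return attempt
        except Conflict:
            # Discard the buffered writes and rewind the local variables.
            local_vars.clear()
            local_vars.update(copy.deepcopy(checkpoint))
    raise RuntimeError("gave up after repeated conflicts")


def place_bid(store, updates, local_vars, attempt):
    local_vars["tries"] = local_vars.get("tries", 0) + 1
    if attempt == 0:
        # Simulate a competing bidder that commits first.
        store.commit(store.version, {"price": 10})
    updates["price"] = store.data.get("price", 0) + 1


store = Store()
local_vars = {}
retries = run_transaction(store, place_bid, local_vars)
print(retries, store.data["price"], local_vars["tries"])
```

The first attempt loses to the simulated competitor and is rewound, so `tries` ends up as 1 rather than 2, and the committed price is 11. Notice how much of this scaffolding the application would otherwise have to carry itself.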

Why Developers Can Relax: YottaDB Has You Covered

So what’s left for the application developer to handle? Not much really. YottaDB manages conflict resolution for you, allowing you to focus on crafting simple business logic.

Its ability to handle conflicts gracefully is a core strength, ensuring robustness even under heavy load. While it might slow down during resolution, it happily works through your code to completion.

Reaching Out to the YottaDB Team

Thanks for sticking with me this far – your patience is about to pay off as our grand auction of galactic proportions starts soon.

But first, let me circle back to that AI conversation. I was surprised this unique capability wasn’t a bigger part of YottaDB’s marketing and remarked about that to ChatGPT. Its response piqued my interest enough that I decided to pass the insights along to the team.

In a world where developers will choose between NoSQL and relational databases, YottaDB’s ability to handle conflicts seamlessly is a huge differentiator – if only more people knew about it.

That’s why I reached out to the YottaDB team – I wanted to ask that this powerful feature get the attention it deserves. And as fate would have it, Bhaskar decided I was the right person to help spread the word. So here I am, not only showing why YottaDB is great but also sharing how a single email and a meddling AI can turn into a full-blown article!

Prepare for Battle: Bid Wars Begins

And now, the moment we’ve been waiting for has arrived. The epic showdown between a squadron of transactions as they battle for supremacy in a galactic-scale auction is about to commence. Buckle up, and let’s get our M code ready for this thrilling mission!

Breaking Down the Bid Wars Code

(Click on the image to access the code. Note: the code kills the ^Action global variable.)

The BidWars.m auction system simulates a highly concurrent bidding environment where many bidders compete to place bids on an item, in this case, our favourite astromech droid. The system launches a specified number of concurrent bidder processes, each trying to outbid the others by incrementing the auction price. This sample showcases YottaDB’s transaction mechanisms when handling contentious access and updates.

- Bidders: The system starts by launching a configurable number of bidders, each represented as an independent process.

- Concurrency: Once all bidders are launched, the auction begins, and each bidder attempts to become the leader by placing higher bids.

- Bid Placement: Each bidder process checks whether it’s the current auction leader. If not, the process places a new bid, incrementing the auction price randomly by a small amount.

- Average Time Calculation: Throughout the auction, the system calculates and logs the average time taken per bid.

- Final Bid: Once the auction duration is complete, the system waits for the remaining bidders to finish and announces the final winning bid, along with key statistics like total bids received and bids per second.

- Live Updates: As the auction progresses, the system provides real-time performance feedback on the current price, total bids, and average time per bid.

A Simpler, Cleaner Way to Handle Conflicts

The entire auction process demonstrates YottaDB’s capability to manage concurrent transactions and handle bidding conflicts gracefully, ensuring the auction runs smoothly despite a frenzy of bidders.

As you examine the source code, you might notice there’s no error handling. This isn’t an oversight but rather a deliberate choice so I could highlight this under-appreciated gem. From my perspective, it’s fascinating how YottaDB handles writes so seamlessly you don’t need to include error-handling code for them.

For those not familiar with M, I hope you find the code approachable and clear. The real value here is in YottaDB’s ability to manage conflicts internally, which simplifies development and keeps your code clean. While error handling remains important in other contexts, the fact that YottaDB takes care of conflicts automatically is a powerful feature that I believe deserves more attention.

YottaDB’s Legacy

In reflecting on the design and elegance of this product, it’s remarkable to consider its origins. Born out of the need to maximize efficiency and keep things small due to the constraints of early hardware, it emerged with simplicity and robustness. Despite the passage of half a century and the evolution of modern database systems, no contemporary solution has yet matched the seamless ease of use that YottaDB offers in the area of handling write conflicts.

The necessity of those early days has shaped YottaDB into something truly special. As I look at the current landscape of database technologies, it’s a testament to the brilliance of those early engineers that their effortless conflict resolution mechanism remains unmatched.

This legacy technology, forged from necessity, continues to stand out, reminding us that sometimes amazing innovations can hide in unexpected places.

About Kurt Le Breton

Kurt is a software engineer based in Australia. His career began in the medical software field, where he discovered his enthusiasm for hierarchical data storage. He later transitioned to developing specialized software for accounting workflows. Outside of programming, he enjoys photography, reading, and dabbling in bookbinding. Sitting around a campfire for an evening is one of the ways he likes to relax and take a break from the modern world.

- Photo of R2D2. Credit: Kristen DelValle, used under Creative Commons by 2.0

Octo now supports dates and times.

While the ability to store and process dates and times is essential to many data processing applications, they are perhaps the least standard basic functionality across SQL implementations, as shown by the following table from SQL Date and Time.

| Example | Format | SQL | Oracle | MySQL | PostgreSQL |
|---|---|---|---|---|---|
| 2022-04-22 10:34:23 | YYYY-MM-DD | | | DATETIME | TIMESTAMP |
| 2022-04-22 | YYYY-MM-DD | DATE | | DATE | DATE |
| 10:34:23 | hh:mm:ss.nn | TIME | TIME | TIME | TIME |
| 2022-04-22 | YYYY-MM-DD | DATETIME | TIMESTAMP | | |
| 2022 | YYYY | | | YEAR | |
| 12-Jan-22 | DD-MON-YY | | TIMESTAMP | | |

ISO 8601 is an international standard for dates and times that SQL implementations support.

Applications can have specialized needs for dates. For example, medical applications need to store imprecise dates, like “July 1978”, or just “1978” (for example, I know that my tonsils were removed in 1958, but I have no idea when in 1958). Fileman dates allow for storing dates with arbitrary levels of (im)precision. When such data is accessed through SQL, specialized dates tend to end up as ad hoc implementations, e.g., stored as VARCHAR, INTEGER, or NUMERIC types.

Octo provides several date and time types:

- DATE

- TIME [WITHOUT TIME ZONE]

- TIMESTAMP [WITHOUT TIME ZONE]

- TIME WITH TIME ZONE

- TIMESTAMP WITH TIME ZONE

The square brackets around [WITHOUT TIME ZONE] mean that this text is optional.

Formats that Octo supports are:

- TEXT (values such as '2023-01-01' and '01:01:01')

- HOROLOG (values in $HOROLOG format)

- ZHOROLOG (values in $ZHOROLOG format)

- ZUT (integers interpreted as $ZUT values)

- FILEMAN (numeric values of the form YYYMMDD.HHMMSS), where

  - YYY is the year since 1700, with 000 not allowed

  - MM (before the decimal point) is the month; two digits, 01 through 12

  - DD is the day of the month; two digits, 01 through 31

  - HH is the hour; two digits, 00 through 23

  - MM (after the decimal point) is the minute; two digits, 00 through 59

  - SS is the second; two digits, 00 through 59
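To make the FILEMAN layout concrete, here is a minimal Python sketch that decodes such a value into text. It is not part of Octo, and `fileman_to_text` is a hypothetical helper. It makes two assumptions that the description above does not state: that imprecise dates carry 00 for an unknown month or day, and that trailing zeros in the time part may be dropped because the value is numeric. The value is taken as a string to avoid floating-point rounding.

```python
def fileman_to_text(value):
    """Decode a Fileman date/time string (YYYMMDD or YYYMMDD.HHMMSS) to text."""
    date_part, _, time_part = str(value).partition(".")
    if len(date_part) != 7 or not date_part.isdigit():
        raise ValueError(f"not a Fileman date: {value!r}")
    yyy = int(date_part[:3])
    if yyy == 0:
        raise ValueError("YYY of 000 is not allowed")
    month = int(date_part[3:5])
    day = int(date_part[5:7])

    text = f"{1700 + yyy:04d}"  # YYY counts years since 1700
    if month:  # assumption: 00 marks an unknown month
        text += f"-{month:02d}"
        if day:  # assumption: 00 marks an unknown day
            text += f"-{day:02d}"
    if time_part:
        # Assumption: trailing zeros may be missing, so pad back to HHMMSS.
        hhmmss = time_part.ljust(6, "0")
        text += f" {hhmmss[:2]}:{hhmmss[2:4]}:{hhmmss[4:6]}"
    return text


print(fileman_to_text("3150910.083818"))  # 2015-09-10 08:38:18
print(fileman_to_text("2780700"))         # 1978-07
print(fileman_to_text("2580000"))         # 1958
```

The last two calls show how the same numeric layout can carry “July 1978” or just “1958” under the 00 convention assumed here.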

Here is an example of a query using Fileman dates against a VistA VeHU Docker image, which contains simulated patient data for training purposes, and which you can run and experiment with.

OCTO> SELECT P.NAME AS PATIENT_NAME, P.PATIENT_ID as PATIENT_ID, P.DATE_OF_BIRTH, P.Age, P.WARD_LOCATION, PM.DATE_TIME as Admission_Date_Time,

TOKEN(REPLACE(TOKEN(REPLACE(P.WARD_LOCATION,'WARD ',''),'-',1),'WARD ',''),' ',2) AS PCU,

CONCAT(TOKEN(REPLACE(TOKEN(REPLACE(P.WARD_LOCATION,'WARD ',''),'-',2),'WARD ',''),' ',1),' ',TOKEN(P.WARD_LOCATION,'-',3)) AS UNIT,

P.ROOM_BED as ROOM_BED, REPLACE(P.DIVISION,'VEHU ','') as FACILTY, P.SEX as SEX, P.CURRENT_ADMISSION as CURRENT_ADMISSION,

P.CURRENT_MOVEMENT as CURRENT_MOVEMENT, PM.PATIENT_MOVEMENT_ID as Current_Patient_Movement,

PM.TYPE_OF_MOVEMENT as Current_Movement_Type, AM.PATIENT_MOVEMENT_ID as Admission_Movement,

AM.TYPE_OF_MOVEMENT as Admission_Type

FROM PATIENT P

LEFT JOIN patient_movement PM ON P.CURRENT_MOVEMENT=PM.PATIENT_MOVEMENT_ID

LEFT JOIN patient_movement AM ON P.CURRENT_ADMISSION=AM.PATIENT_MOVEMENT_ID

WHERE P.CURRENT_MOVEMENT IS NOT NULL

AND P.ward_location NOT LIKE 'ZZ%'

AND P.NAME NOT LIKE 'ZZ%'

AND PM.DATE_TIME > timestamp'2015-01-01 00:00:00' LIMIT 5;

patient_name|patient_id|date_of_birth|age|ward_location|admission_date_time|pcu|unit|room_bed|facilty|sex|current_admission|current_movement|current_patient_movement|current_movement_type|admission_movement|admission_type

ONEHUNDRED,PATIENT|100013|1935-04-07|89|ICU/CCU|2015-09-10 08:38:18|| |ICU-10|DIVISION|M|4686|4686|4686|1|4686|1

TWOHUNDREDSIXTEEN,PATIENT|100162|1935-04-07|89|7A GEN MED|2016-06-26 20:24:39|GEN| ||CBOC|M|4764|4764|4764|1|4764|1

ONEHUNDREDNINETYSIX,PATIENT|100296|1935-04-07|89|7A GEN MED|2015-09-25 11:56:03|GEN| ||CBOC|M|4711|4711|4711|1|4711|1

ZERO,INPATIENT|100709|1945-03-09|79|7A SURG|2015-04-04 13:38:10|SURG| |775-A|CBOC|M|4672|4672|4672|1|4672|1

EIGHT,INPATIENT|100716|1945-03-09|79|3E NORTH|2015-04-03 11:25:45|NORTH| |3E-100-1|CBOC|M|4636|4636|4636|1|4636|1

(5 rows)

OCTO>

If your application has specialized dates, we invite you to discuss your requirements with us, so that we can extend Octo to meet your needs.

- Photo of Mayan calendar stone fragment from the Classical Period (550-800 CE) at Ethnologisches Museum Berlin. Credit: José Luis Filpo Cabana, used under the Creative Commons Attribution-Share Alike 4.0 International license.

- Photo of one of NIST’s ytterbium lattice atomic clocks. NIST physicists combined two of these experimental clocks to make the world’s most stable single atomic clock. The image is a stacked composite of about 10 photos in which an index card was positioned in front of the lasers to reveal the laser beam paths. Credit: N. Phillips/NIST

YottaDB r2.00 Released

YottaDB r2.00 is a major new release with substantial new functionality and database format enhancements.

- Inherited from the upstream GT.M V7.0-000, YottaDB r2.00 creates database files of up to 16Gi blocks. For example, the maximum size of a database file with 4KiB blocks is 64TiB, which means you can use fewer regions for extremely large databases. With YottaDB r2.00, you can continue to use database files created by r1.x releases, except that the maximum size of a database file created with prior YottaDB releases remains unchanged.

- For direct mode, as well as utility programs, YottaDB can optionally use GNU Readline, if it is installed on the system. This includes the ability to access and use command history from prior sessions.

- Listening TCP sockets can be passed between processes.

- The ydbinstall/ydbinstall.sh script has multiple enhancements.
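The 64TiB figure quoted in the first item above follows directly from the block count and block size. As a quick check, in Python (the variable names are only for this illustration):

```python
KI, GI, TI = 2**10, 2**30, 2**40  # binary prefixes: Ki, Gi, Ti

max_blocks = 16 * GI   # r2.00 limit on blocks per database file
block_size = 4 * KI    # 4KiB blocks, in bytes
max_bytes = max_blocks * block_size

print(max_bytes // TI)  # 64, i.e., 64TiB
```

Larger block sizes scale the maximum file size proportionally under the same block-count limit.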

In addition to enhancements and fixes made by YottaDB, r2.00 inherits numerous other enhancements and fixes from GT.M V7.0-000 and V7.0-001, all described in the Release Notes.

Graphical Monitoring of Statistics Shared by Processes

Quick Start

Monitor the shared database statistics of your existing applications in minutes, starting right now.

- Ensure that node.js is installed.

- Use the ydbinstall script to install the YottaDB GUI. (This also installs the YottaDB web server.)

- Ensure that in the environment of each application process, the variable ydb_statshare is set to 1.

- Start the YottaDB web server using the same global directory as your application.

- Connect to the web server to start the YottaDB GUI. In the Dashboard, choose Database Administration / Monitor Database. Choose the data you want to monitor, and choose how you want it displayed. Click Start to see the data.

The GUI comes with a demo that includes a simulated application. This video walks you through using the demo.

Read on to dig deeper.

Motivation

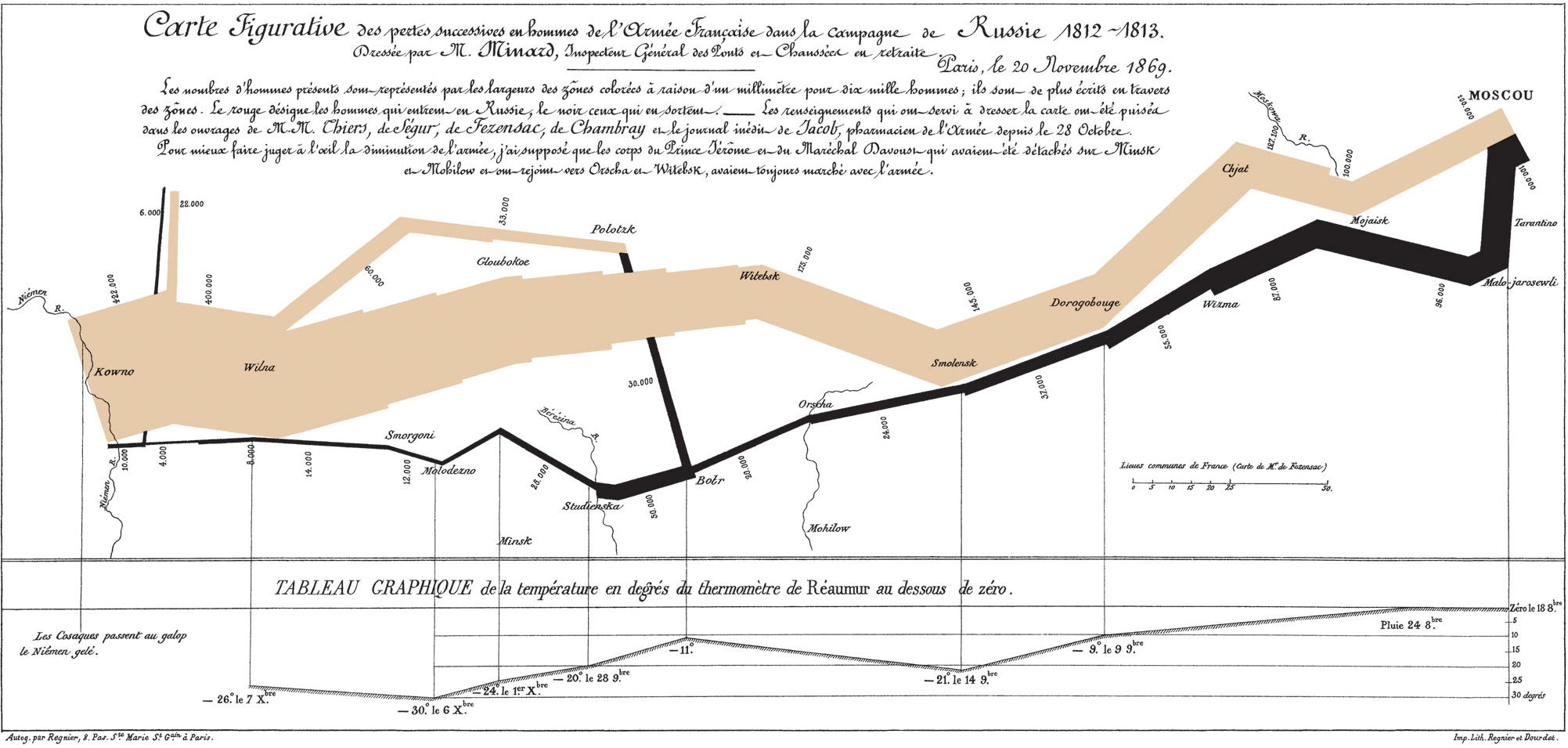

Visual presentation is the most effective way most of us ingest complex data. As with the unique Minard depiction of Napoleon’s disastrous march on Moscow shown here, we routinely use graphs every day.


Processes accessing databases have tens of internal counters (collectively referred to as “statistics”) for each database file that they have open. The YottaDB GUI allows you to visually monitor these statistics in real time.

Statistics

There are two sources of statistics to monitor: statistics shared by processes and statistics in the database file header. Each has its uses.

Shared Statistics

YottaDB processes can opt to share their operational database statistics. A process shares its statistics if, at process startup, the environment variable ydb_statshare is 1. Optionally, the environment variable ydb_statsdir can be set to a temporary directory for sharing and monitoring.
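As an illustration, an application launcher written in Python might prepare the environment for its child processes along these lines. Only the two environment variable names come from the text above; `stats_sharing_env`, the `./my_app` command and the `/tmp/ydbstats` path are hypothetical.

```python
import os


def stats_sharing_env(base_env=None, stats_dir=None):
    """Return a copy of the environment with statistics sharing switched on."""
    env = dict(os.environ if base_env is None else base_env)
    env["ydb_statshare"] = "1"          # opt in to sharing statistics
    if stats_dir is not None:
        env["ydb_statsdir"] = stats_dir  # optional directory for sharing
    return env


env = stats_sharing_env(stats_dir="/tmp/ydbstats")  # hypothetical path
print(env["ydb_statshare"], env["ydb_statsdir"])
# A launcher could then start the application with, for example:
#   subprocess.run(["./my_app"], env=env)   # hypothetical command
```

Because the variable is read at process startup, it has to be in place before the application process is launched, not set afterwards.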

File Header Statistics

Statistics in the database file header capture the aggregate data of all processes accessing the database from the database creation. Viewing these statistics requires access to the database file but does not require application processes to share statistics.

Monitoring

Shared Statistics

Monitoring statistics shared by processes enables focused analysis, for example, visualization of current performance issues and the behavior of specific processes. The YottaDB GUI provides graphical monitoring of shared statistics.

With the intent of ensuring that it is intuitive to use, the GUI has integrated online documentation, including mouse-overs, but no separate user documentation other than installation instructions.

The video on this page walks you, from start to finish, through graphically monitoring the statistics of an existing application on a remote server.

If you want to implement your own monitoring of shared statistics, YottaDB provides a %YGBLSTAT() utility program.

File Header Statistics

For production instances, we recommend continuously capturing statistics every minute or so. You can use the gvstat program or write your own similar program. Capturing the data and creating baselines will help you in capacity planning as well as incident analysis. Continuously monitoring and displaying key parameters can additionally give you insight into the dynamic behavior of your application.

The guest blog post YottaDB Dashboard by Ram Sailopal demonstrates monitoring File Header Statistics with Grafana.

Security

Statistics are metadata, not data. While metadata should be shared advisedly, it does not typically have the same confidentiality restrictions as data. The GUI and web server follow normal YottaDB security policies.

- Processes must opt-in to share statistics.

- The web server process must be started by a userid on the system, and has no access capabilities beyond those of that userid.

- Database monitoring can be performed by a read-only GUI. You can see from the videos that the GUI is operating in read-only mode.

- While the GUI does have a read-write mode, for example, to support editing of global directories, database monitoring requires just read-only access.

- To access statistics, the web server needs access to the global directory and the $ydb_statsdir directory if it is specified. You can use Linux permissions to control access.

- The JavaScript libraries used are mature, versioned, and statically served from where the GUI is installed on the server.

Please Try It!

We invite you to use the GUI to monitor database statistics shared by your application processes and tell us what you think. As it is a new application, we are sure that it offers many opportunities for enhancement and improvement.

- If you have YottaDB support, please reach out to us through your YottaDB support channel.

- If you do not have YottaDB support, you can reach out to us:

- On the gui channel of our Discord server.

- By creating an Issue on the YDBGUI project at GitLab.

Thank you for using the YottaDB GUI.

Making lua-yottadb Fast

Berwyn Hoyt

We thank Berwyn Hoyt for his first guest post on the YottaDB blog, and hope there are many more to follow. If you would like to post on the YottaDB blog please contact us at info@yottadb.com.

TLDR: YottaDB is a fast and clean database and it deserves a Lua API that is as fast as possible. This article discusses how we improved lua-yottadb to go ~4× as fast when looping through database records, and a stunning 47× as fast when creating Lua objects for database nodes, plus other improvements (results here). Low-hanging fruit aside, the biggest (and trickiest) improvement was caching the node’s subscript array in the Lua object that references a specific database node. Finally, porting to other language wrappers is discussed, as well as a tentative thought on how YDB might support an even faster API. Along the way we learned numerous things that might help someone port these efficiencies to other languages.

I had recently released v1.0 of lua-yottadb,1 a syntax upgrade to a tool that gives Lua easy access to YottaDB. Then in Oct 2022, I got my first user: Alain Descamps, of the University of Antwerp Library (sponsors of lua-yottadb), and a heavy user of YottaDB. An actual user. Brilliant!

But the euphoria didn’t last long. Mere hours later, I got Alain’s first benchmark: Lua was ¼ of the speed of YottaDB’s native language (M) at simply traversing (counting) database records on our dev server. Even worse, when run on my local PC’s database, Lua was ⅛th the speed of M. Grrr… So I embarked on what I thought would be a 5-day pursuit of efficiency … but it took 25 days! Nevertheless, I did get the dev server’s Lua time down from 4× to 1.3× the duration of M. A very nice result.

What was the problem?

Alain’s Lua benchmark script was simple – akin to this:

ydb = require('yottadb')

gref1 = ydb.key('^BCAT')('lvd')('')

cnt = 0 for x in gref1:subscripts() do cnt=cnt+1 end

print("total of " .. cnt .. " records")

If you’re new to YottaDB or M, to understand what’s going on, you need to know that YottaDB database nodes are represented by a series of ‘subscript’ strings, just like a file-system path. Whereas a path might be /root/var/log/file, a YottaDB node would be root("var","log","nodename") or root.var.log.nodename. Each node can hold a bit of data, further sub-nodes (similar to directories in a file-system), or both.

The program above simply loops through a sequence of 5 million database nodes with subscripts ^BCAT.lvd.<n> like so:

^BCAT("lvd",1)

^BCAT("lvd",2)

^BCAT("lvd",3) ...

To do so took 20 seconds in Lua, and 5 seconds in M. A quick code review of lua-yottadb found several pieces of low-hanging fruit in the gref1:subscripts() method above. The issues were that for every loop iteration, gref1:subscripts() did this:

1. Checked each subscript in the node’s ‘path’ at the Lua level to make sure it was a valid string.

2. Checked each subscript again at the C level.

3. Converted the subscript array into a C array of string pointers (for calling the YottaDB API), then discarded the C array.

Yep. All that happened at every iteration.

Next, I built some benchmark tests to track our improvement, then got stuck into improving things.2 Avoiding number (3) would require caching of the C array. But we could avoid (1) and maybe (2) by checking them just once at the start of the loop. This was fairly quickly done, with respectable improvements to iteration speed, including:

- 25% faster type checking of function parameters using table-lookup rather than a for-loop to find valid types

- 50% faster by not re-checking subscripts at the Lua level every iteration
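The table-lookup idea in the first bullet can be sketched in C. This is only an illustration of the technique, not lua-yottadb’s code: the `value_type` enum and both function names are made up for the example.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical value types; they stand in for whatever types a wrapper checks. */
typedef enum { T_NIL, T_BOOLEAN, T_NUMBER, T_STRING, T_TABLE, T_COUNT } value_type;

/* Loop version: scan a list of accepted types on every check. */
static bool type_ok_loop(value_type t, const value_type *accepted, size_t n) {
    for (size_t i = 0; i < n; i++)
        if (accepted[i] == t) return true;
    return false;
}

/* Table version: one indexed read per check. Unlisted types default to false. */
static const bool subscript_type_ok[T_COUNT] = {
    [T_NUMBER] = true,
    [T_STRING] = true,
};

static bool type_ok_table(value_type t) {
    assert((unsigned)t < T_COUNT);   /* guard the array index */
    return subscript_type_ok[t];
}
```

Both functions give the same answer; the table version simply replaces the scan with a single array read, which is where a saving of this kind would come from.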

But what if we wanted to improve every single database operation, not just iteration? The benchmarks showed that there were two slow tasks critical to every single database operation: a) converting the subscript list to C, and b) creating new Lua nodes.

As an example of the latter, let’s create a Lua-database object:

guy = ydb.node('demographics').country.person[3]

We can do all kinds of database activities on that node, for example:

guy:lock_incr()

guy:set('Fred')

guy:lock_decr()

or even create subnodes:

guy.gender:set('male')

guy.genetics.chromosome:set('X')

Each '.' above creates a new Lua subnode object (in this case, genetics and then chromosome) before you can finally set it to 'X'. You can imagine that a Lua programmer will be doing a lot of this, so it’s a critical task: we need to optimise node creation.

To achieve this we needed to find the ‘Holy Grail’: fast creation of cached C arrays. That would extend these benefits to every database function.

My early wins made it feel like I was about half way there. I’d told my employer the efficiency task would take 5 days. By this time I’d used up about half of that, and I thought I was on track. All that was left was to cache the subscript list. I mean, how hard can it be to cache something? You just have to store the C array when the node is first created, and use it again each time the node is accessed, right? Little did I know!

Caching subscripts: a surprisingly daunting task

Achieving these two goals together proved to be so difficult that it took me three rewrites.

At the outset, each node already held all its subscripts – but in Lua, rather than C. The node object looked like this:

node = {

__varname = "demographics",

__subsarray = {"country", "person", "3", "gender"}

}

Be aware that creating a table in Lua is slow compared to a C array: it requires a malloc(), linkage into the Lua garbage collector, a hash table, and creation of a numerical array portion. And here we need two of them (one for the node object itself, and another for the __subsarray). So it’s relatively slow. But at this point I didn’t know that this was the speed hog.

Iteration 1: An array of strings

In the first 2 iterations, I simply stored the C cache-array as a userdata field within the regular node object as follows (userdata is a Lua type that represents a chunk of memory allocated by Lua for a C program):

node.__cachearray = cachearray_create("demographics", "country", "person", "3", "gender")

Since Lua already referenced the strings in __subsarray (presented previously), my function cachearray_create() just had to allocate space for it: malloc(depth*string_pointers), and point them to the strings already existing in Lua. But this would mean I had to retain the __subsarray table to reference these strings and prevent Lua from garbage-collecting them while in use by C.

Although this caching would speed up node re-use, adding __cachearray to the node would actually slow down node creation time. To prevent the slow-down, I noticed that each child node repeats all its parent’s subscripts. So I saved both memory and time, by making each child node object contain only its own rightmost subscript __name and point to __parent for the rest:

node = {

__varname = "demographics",

__name = "gender",

__parent = parent_node -- in this case, "3"

}

This way I avoid having to create the whole Lua __subsarray table for each node. So each node contains a linked list to its __parents: gender -> 3 -> person -> country.
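To make the linked-list idea concrete, here is a minimal C sketch of rebuilding the full subscript path from a node that knows only its own name and its parent. The `node` struct and both function names are hypothetical stand-ins for this example, not the Lua objects or C functions used in lua-yottadb.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical node: holds only its own subscript and a link to its parent. */
typedef struct node {
    const char *name;
    const struct node *parent;   /* NULL for the root (the variable name) */
} node;

/* Count the nodes from this one back to the root. */
static size_t node_depth(const node *n) {
    size_t depth = 0;
    for (; n != NULL; n = n->parent) depth++;
    return depth;
}

/* Build a root-first array of names by filling it from the end while
   walking the parent links. The caller frees the result; NULL on failure. */
static const char **node_path(const node *n, size_t *depth_out) {
    assert(n != NULL && depth_out != NULL);
    size_t depth = node_depth(n);
    const char **path = malloc(depth * sizeof *path);
    if (path == NULL) return NULL;
    for (size_t i = depth; i-- > 0; n = n->parent)
        path[i] = n->name;
    *depth_out = depth;
    return path;
}
```

The array stores pointers only, so the strings themselves must outlive it. That is the same lifetime constraint described above for strings owned by Lua.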

If you’re getting bored at this point, I suggest you skip to iteration 3.

Segfaults and Valgrind

That was the design for iteration 1. But the __parent made it complicated, because I had to create the cache-array by recursing backwards into all the node’s ancestors. This complexity hid a nasty segfault that I couldn’t find for a long time: the kind of C pointer bug where the symptom occurs nowhere near the cause. (I should have used valgrind myprog to help find it, but I hadn’t used Valgrind before, and I didn’t realise how dead simple it was to use. Later, I needed it again, and discovered that using it is true bliss.)

Anyway, the bug ended up being a case of playing “where’s Wally” except with hidden asterisks. The code was: malloc(n_subscripts * sizeof(ydb_buffer_t*)), except I shouldn’t have included the final * because the YDB API uses an array of buffer structs, not pointers to structs. In the end I found the bug by manually running Lua’s collectgarbage() – which often makes memory errors occur sooner rather than later.
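The stray asterisk is easy to reproduce. In the sketch below, `buffer_t` is a stand-in with the same three fields as `ydb_buffer_t` in libyottadb.h, so that the example compiles without YottaDB installed; the function names are invented for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Stand-in with the same three fields as ydb_buffer_t in libyottadb.h. */
typedef struct {
    unsigned int len_alloc;
    unsigned int len_used;
    char *buf_addr;
} buffer_t;

/* Buggy size: room for n pointers, not n structs. */
static size_t bytes_buggy(size_t n) { return n * sizeof(buffer_t *); }

/* Intended size: room for n structs. */
static size_t bytes_correct(size_t n) { return n * sizeof(buffer_t); }

/* Tying the element size to the destination pointer leaves no type name
   to mistype, so the stray '*' cannot creep in. */
static buffer_t *buffers_alloc(size_t n) {
    assert(n > 0);
    buffer_t *subs = calloc(n, sizeof *subs);
    return subs;   /* NULL on allocation failure */
}
```

Because the struct contains a pointer plus two integers, the buggy size is always smaller than the correct one, so writes to the later array elements land outside the allocation.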

Fast traversal: ‘mutable’ nodes

Finally, we had a node with cache – for fast access to the database. But we still didn’t have fast node traversal, like in Alain’s tests. This is because each time you iterate Alain’s for loop, you have to create a new node: ^BCAT("lvd",1), ^BCAT("lvd",2), etc. So I made the function cachearray_subst() re-use the same array, altering just the last subscript.
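The idea of re-using one array and changing only its last subscript might be sketched as follows. This is not the real cachearray_subst(): the `path_cache` struct, its fixed sizes, and the `path_subst_last` name are assumptions chosen to keep the example short.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAX_SUBS 8    /* arbitrary limits for this sketch */
#define SUB_MAX  32

typedef struct {
    size_t depth;                    /* number of subscripts in use */
    char subs[MAX_SUBS][SUB_MAX];    /* fixed-size storage keeps the sketch short */
} path_cache;

/* Overwrite only the last subscript; earlier ones are left untouched.
   Returns 0 on success, -1 if the new subscript does not fit. */
static int path_subst_last(path_cache *p, const char *sub) {
    assert(p != NULL && p->depth > 0 && p->depth <= MAX_SUBS);
    size_t len = strlen(sub);
    if (len >= SUB_MAX) return -1;
    memcpy(p->subs[p->depth - 1], sub, len + 1);   /* copies the terminator too */
    return 0;
}
```

An iterator built this way does no allocation per step: each step costs one short copy, whatever the depth of the node.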

But changing a node’s subscripts is dodgy. It makes the same node object in Lua refer to a different database node than it used to. Imagine that you’re the programmer and have stored that Lua object for use later (e.g., when scanning through to find the highest value node: maxnode = thisnode).3 You’ll still be expecting the stored maxnode to point to the maximum node.

Enter the concept of a ‘mutable’ node, which the programmer explicitly expects to change. Lua iterators like pairs() can now return specifically mutable nodes. The programmer can convert this to an immutable node if they want to store it for use after the loop, or they can test for mutability using the ismutable() method.

Well, it worked. Now we have a lightning-fast iterator, and in most cases the programmer doesn’t have to worry about mutability.

Garbage collection & Lua versions

All that remained was to tell Lua’s garbage collector about my mallocs. In Lua 5.4 this would have been easy: just add node method __gc = cachearray_free to the object. But __gc doesn’t work in Lua 5.1 on tables (which is what our node object is), and we wanted lua-yottadb to support Lua 5.1 since LuaJIT is stuck on the Lua 5.1 interface – so some people still use Lua 5.1. Instead of simply setting __gc = cachearray_free, I had to allocate memory using Lua’s “full userdata” type, which is slower than malloc, but at least it provides memory that is managed by Lua’s garbage collector.4

Lastly, I wrote some unit tests, and I thought I’d be done. But node creation wasn’t really any faster.

Iteration 2: A shared array of strings

At this stage I’d already spent 12 days: over twice as long as I’d anticipated. That’s not too outrageous for a new concept design. Strictly, I should have told my employer that I was over-budget, so they could make the call on further development. But I was embarrassed that my node creation benchmark was not really faster than the original. We had fast iteration now, but I had anticipated that everything would be faster. Something was wrong, and I decided to just knuckle down and find it.

At this point I made a mistaken judgment-call that cost development time. I guessed (incorrectly) that the speed issues were because each node creation had to copy its parent’s array of string pointers. Instead of verifying my theory, I implemented a fix, adding complexity as follows.

Each child node retained a duplicate copy of the entire C array of string-pointer structs. But this seemed unnecessary since each child added only one subscript string at the end. Let’s keep just one copy of the C array and have each child node reference the same array but keep its specific depth as follows:

array = cachearray("demographics", "country", "person", "3", "gender")

root_node = {__cachearray=array, __depth=1}

country_node= {__cachearray=array, __depth=2}

person_node = {__cachearray=array, __depth=3}

id_node = {__cachearray=array, __depth=4}

gender_node = {__cachearray=array, __depth=5}

This works, but adds some complexity. If you create alternate subscript paths like root.person.3.male and then person.4.female, the code has to detect that the cache array is already used at subscript 3, so you can’t change it to 4, and you have to create a duplicate cache-array after all. It also complicates the Lua code because the C code now has to return a depth as well as the array.
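The conflict check described here can be sketched in C. The `shared_array` struct and the `can_share` and `add_child` functions are hypothetical and only illustrate the decision; they are not taken from lua-yottadb.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define MAX_DEPTH 8   /* arbitrary limit for this sketch */

/* Hypothetical shared array: several nodes point at it, each with its own depth. */
typedef struct {
    size_t used;                    /* slots filled so far */
    const char *subs[MAX_DEPTH];
} shared_array;

/* Can a child of a node at parent_depth store `sub` in this array?
   Yes if the next slot is free, or if it already holds the same subscript. */
static bool can_share(const shared_array *a, size_t parent_depth, const char *sub) {
    assert(a != NULL && parent_depth <= a->used);
    if (parent_depth >= MAX_DEPTH) return false;
    if (parent_depth == a->used) return true;          /* slot is free */
    return strcmp(a->subs[parent_depth], sub) == 0;    /* slot is taken */
}

/* Store the child's subscript if sharing is possible. Returns the child's
   depth, or 0 to tell the caller it must duplicate the array instead. */
static size_t add_child(shared_array *a, size_t parent_depth, const char *sub) {
    if (!can_share(a, parent_depth, sub)) return 0;
    if (parent_depth == a->used) a->subs[a->used++] = sub;
    return parent_depth + 1;
}
```

With the path root, person, 3, male already stored, asking for a child "4" of the person node returns 0, which is the case where a duplicate cache-array is needed after all.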

Although it does speed up node creation, it’s still not as much as expected, because it’s also slowing down node creation simply by adding the __depth field to the object.5

Iteration 3: A breakthrough – the complete object in C

Up to this point I had been assuming I needed a Lua table to create a Lua object. After all, it seemed so efficient to make C just point to the existing Lua strings; and for that I needed Lua to reference those strings to keep them from being garbage collected: hence a Lua table.

But now I finally did some more benchmarking and showed Lua table creation to be the speed hog. Remember: it does a malloc(), links to the Lua garbage collector, creates a hash table, and a numerical array portion. Plus, we’re adding three hashed fields, which are not exactly instant: __parent, __cachearray, and __depth.

It sure would be much faster if we could store all this data inside a C struct. So I read the manual again and discovered that the userdata type can be a Lua object all by itself. I should have guessed this from the start. You can assign a metatable to a userdata – which means that you can give it object methods – which means it can actually be the node object, all by itself. No need to create a Lua table for a C object at all.

Implementing this, my userdata C struct now looks something like this:

typedef struct cachearray_t {

int subsdata_alloc; // size allocated for strings (last element)

short depth_alloc; // number of pre-allocated array items

short depth_used; // number of used items in array

ydb_buffer_t subs_array[]; // struct reallocated if this exceeded

// char subsdata[]; follows subs_array in the same allocation (C allows only one flexible array member per struct)

} cachearray_t;

I pre-allocated space for extra slots (5, by default, before needing reallocation). Thus, when you create ydb.node("demographics") you can follow that up with .country.person[3].female and all these subsequent subscripts get stored in the same, previously allocated C-array.

Notice that this struct contains two expanding sections (it’s really two separate structs): the array of string pointers subs_array and the actual string characters subsdata. It would be better to keep these in a single array of structs, and thus have just one expanding section. But we cannot do that because we need an array of ydb_buffer_t to pass to the YDB API. These two expanding sections add complexity to the code, but don’t slow it down. It would be simpler to allocate two userdata sections: one for each section – but that would slow it down.

Also notice that since subsdata now stores subscript strings in my C userdata, I don’t need to keep a Lua table that references their Lua copies, which are now set free.
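One way to keep two expanding sections inside a single allocation is sketched below. It is a simplified illustration under stated assumptions, not the actual cachearray.c: `buffer_t` mirrors the fields of `ydb_buffer_t`, the `packed_path` layout and function names are invented, and growing the block (with the pointer fix-ups that requires) is left out.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Stand-in with the same three fields as ydb_buffer_t in libyottadb.h. */
typedef struct {
    unsigned int len_alloc;
    unsigned int len_used;
    char *buf_addr;
} buffer_t;

typedef struct {
    size_t data_alloc;    /* bytes reserved for string data */
    size_t data_used;     /* bytes of string data in use */
    short depth_alloc;    /* slots reserved in subs[] */
    short depth_used;     /* slots in use */
    buffer_t subs[];      /* string data starts right after the last slot */
} packed_path;

/* Start of the string section: just past the reserved slots. */
static char *path_data(packed_path *p) {
    return (char *)&p->subs[p->depth_alloc];
}

/* One malloc covers the header, the slots and the string bytes. */
static packed_path *path_new(short depth_alloc, size_t data_alloc) {
    assert(depth_alloc > 0);
    packed_path *p = malloc(sizeof *p + (size_t)depth_alloc * sizeof(buffer_t) + data_alloc);
    if (p == NULL) return NULL;
    p->data_alloc = data_alloc;
    p->data_used = 0;
    p->depth_alloc = depth_alloc;
    p->depth_used = 0;
    return p;
}

/* Copy a subscript into the string section and point the next slot at it.
   Returns 0 on success, -1 when either section is full (a fuller version
   would reallocate and then re-point every buf_addr). */
static int path_append(packed_path *p, const char *sub) {
    size_t len = strlen(sub);
    if (p->depth_used == p->depth_alloc || len > p->data_alloc - p->data_used)
        return -1;
    char *dest = path_data(p) + p->data_used;
    memcpy(dest, sub, len);
    buffer_t *slot = &p->subs[p->depth_used++];
    slot->len_alloc = slot->len_used = (unsigned int)len;
    slot->buf_addr = dest;
    p->data_used += len;
    return 0;
}
```

The slots form a plain contiguous array of buffer structs, which is the shape an API taking an array of `ydb_buffer_t` expects, while the characters they point to sit in the same block just behind them.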

Anyway, this cache-array can now hold subscripts for several nodes. But I still need to store the depth of each particular node somewhere. For this, I have a ‘dereference’ struct which points to a cache-array and remembers the depth of this particular node.

typedef struct cachearray_dereferenced {

struct cachearray_t *dereference; // merely points to a cachearray

short depth; // number of items in this array

} cachearray_dereferenced;

For the root node, I store both the cache-array and this dereference struct in the same userdata. Child nodes only need the dereference struct.6 This dereferencing does add some complexity, but it’s worth it to avoid proliferating duplicate cache-arrays, which would fill up CPU cache and slow things down.

Finally, all subscript strings are cached in C, and I only need to create a userdata for each node, not a table. The irony is that iteration 1’s original motivation to re-use Lua strings was a false economy. It turns out that it’s just as fast to copy the strings into C as it is in Lua to do the necessary check that all subscripts are strings. And it doesn’t even waste any memory, because the Lua strings can then be garbage collected instead of held by reference.

By now, I’ve taken 25 days to implement this thing. I’m going to have some serious explaining to do to my employer. That, in fact, is how this article began.

Iteration 4: The gauntlet challenge – cheap node creation

Virtually instant subnode creation is possible if light userdata were used for it. However, these nodes could never be freed since __gc finalizer methods do not work on light userdata in Lua. Can anyone think of a workaround?

Consider a Lua object for database node demographics. Subnodes can be accessed using dot notation: demographics.country.person. Even with our latest design, subnodes still have the overhead of allocating a full userdata. But Lua has a cheaper type called a light userdata: which is nothing more than a C pointer, and free to create. We just need to pre-allocate space for several dereferenced subnodes (shown below) within the parent node’s userdata, and child nodes could simply point into it:

typedef struct cachearray_t {

cachearray_dereferenced subnodes[5]; // pre-allocated child slots (field name is illustrative)

<regular node contents> ...

This will finally make full use of that mistaken judgment-call I made early on, and re-use pre-allocation to ultimate effect.

But there’s a gotcha. Since a light userdata object has no storage, Lua doesn’t know what type of data it is, and therefore what metatable (i.e. object methods) to associate with it. So there’s a single global metatable for all light userdata objects. No matter: we can still hook the global metatable, and then double-check that it points to a cache-array, before running cache-array class methods on it. Should work fine.

Node creation time in lua-yottadb v2.1 is already 47× as fast as v1.2, but I’m anticipating this improvement will increase that to 200×, making dot notation virtually free. This will also keep all allocated memory together in one place: also better for CPU caching.

This hack would actually work … except for one problem: it can’t collect garbage. Tragically, Lua ignores the __gc method on light userdata. This means we’ll never be able to remove the light userdata’s reference to its root node. Which creates a memory leak. Here’s an example to explain:

x = ydb.node("root").subnode

x = nil

First the root node is created; then subnode references root; then Lua assigns subnode to x so that x now references subnode. Finally, x is deleted. The problem is that when x is garbage-collected, Lua does not collect light userdata subnode (which is still referencing root). So root is not collected: a memory leak.

Can any of my readers see a solution to this puzzle? I’m throwing down the gauntlet. Find a way to work around Lua’s lack of garbage collection on light userdata, then post it here, and I’ll make you famous on this blog. 😉

On the other hand, Mitchell has rightly pointed out that this is probably premature optimisation. After profiling, you can easily recover the speed in time-critical code by simply giving up dot notation and creating the node object all at once with ydb.node("demographics", "country", "person").

Portability: Python, etc.

In theory, my final cachearray.c is the best version to port to another language without a complete re-write because it now keeps its strings entirely in C – which means it’s fairly self-contained and portable. Having said that, it will need changes in how it receives function parameters: which is from the Lua stack. The Python/etc. portion of the wrapper will also need extensions to support cache-arrays.

A quick look at Python’s YDBPython, for example, shows that its C code has the same design as the original lua-yottadb – and is probably slow. Every time it accesses the database, it has to verify your subscript list, and copy each string to C. Unlike lua-yottadb, YDBPython also does an additional malloc() for each individual subscript string. Caching the subscript array could provide a significant speedup, just as it did for Lua.

The Python code to create an object also has the same low-hanging fruit as lua-yottadb. But YDBPython has an additional easy one-line win by using __slots__, a Python feature not available in Lua. A quick benchmark tells me that using __slots__ makes Python bare object creation 20% faster (though it needs another 25% to be as fast as Lua’s object creation using userdata).

At this stage I do not know much about Python’s C API: neither about Python’s alternatives to userdata objects, nor whether Python has a faster way of implementing dot notation without creating intermediate nodes.

YDB API overhead: a suggestion

After all this work, why is M still faster? I suspect that lua-yottadb is now about as fast as it can get. So why is database traversal in Lua still 30% slower than M (on our server), when a basic for loop in Lua is 17× as fast as M? My hunch was that there are subscript conversion overheads on the YottaDB side of the YottaDB-C API, that M doesn’t incur since YottaDB includes both the YottaDB database and a complete implementation of the M language. I had a chat with Bhaskar, founder of YottaDB, and he was able to confirm my hunch.

Since YottaDB includes both the database engine and the M runtime system, it can make optimizations that another language runtime system cannot make when accessing the database. At a high level, that is why database access from non-M languages can get close to M, but cannot quite match it.

For example, since a value can have multiple representations, e.g., 1234 can be both a string as well as a number, with a cost to convert between representations, YottaDB stores metadata about data that includes the representations it already has. This is a classic trade-off of space for speed. This, and other optimizations, give M an edge over other languages when accessing YottaDB.

However, while database access specifically may be faster in M, performance should always be considered in the context of complete applications, any of which will execute a substantial amount of logic other than just database access. The fact that a basic for loop in Lua is so much faster than one in M may suggest that a complete application coded in Lua will outperform an equivalent application coded in M. So, my employer and I, as well as YottaDB, consider this exercise to be a success.

My work on this project suggests a possible avenue for an enhancement to YDB itself that may be worth considering. Given that languages like Lua and Python access data through node objects, one possible way to improve performance would be for YDB itself to expose a function to cache a subscript array, storing it as mvals within YDB:

handle = ydb_cachearray("demographics", "country", "person", "3", "gender")

This would return a handle to that YDB-owned cache-array, which could then be supplied to subsequent YDB API calls as the root operating node, instead of the GLVN, varname. This would allow rapid access to the same database node, or rapid iteration through subnodes, without having to re-convert all 5 subscripts on every call.

Of course, this is just a theory. Profiling would first be needed to check whether this conversion process is the actual cause of the speed differential, and how much this solution would typically help.

Lessons

Perhaps most significantly, this article raises some of the significant issues that efficiency improvements in any language will have to work through. Hopefully, this will allow someone to implement it in iteration 1, rather than iteration 3.

Here are a few other take-homes from this experience:

- Always test your theories about what’s causing the slow-down before implementing a complete fix.

- Choose benchmarks relevant to your applications, because those will be what you optimise.

- Communicate with your employer early, even if it’s embarrassing: even the reporting process might expose your assumptions. (I knew this already, but pride got in the way 😳).

- Useful details about implementing a Lua library in C: speedy userdata, light userdata, and Valgrind for emergencies.

I sure did learn a lot through this process, and I hope you’ve learned something, too.

About Berwyn Hoyt

Berwyn Hoyt is a senior embedded systems engineer, having co-founded two startups in embedded systems. He has lived in the USA and New Zealand most of his life, but has recently moved to Sydney, to be with his wife who now works for a church there. Berwyn loves low-level, efficient software, and puzzles that are difficult. He especially loves ring-and-string puzzles, and has recently had a breakthrough on the extremely difficult Quatro puzzle (pictured), which has amused him, sporadically, for a decade. He has now solved the 3-ring version and is getting ready to tackle all 4 rings.

(All photos courtesy Berwyn Hoyt.)

YottaDB r1.38 is a minor release that includes functionality needed at short notice by a customer.

- A MUPIP REPLICATE option provides for a replication stream to include updates made by triggers on the source instance.

- $ZPEEK() and ^%PEEKBYNAME() provide direct access to an additional process-private structure.

- The --gui option of ydbinstall / ydbinstall.sh installs the YottaDB GUI.

- Changes to subscripts and more meaningful SQL column names make %YDBJNLF more useful.

The %YDBJNLF utility program, which was released as field-test software in r1.36, is considered Supported for production use in r1.38. Also, the Supported level of Ubuntu on x86_64 moves up from 20.04 LTS to 22.04 LTS.

While all YottaDB software is free to use under our free / open-source software licensing, r1.38 illustrates the value of being a YottaDB customer rather than a user: in addition to support with assured service levels, we will work with you to prioritize enhancements and fixes you need.

As with all YottaDB releases, there are a number of fixes and smaller enhancements, as described in the release notes.

We also invite you to try the new YottaDB GUI.

YottaDB r1.34 Released

While YottaDB r1.34 is an otherwise modest successor to r1.32, internal changes allow the popular programming language Python to be fully Supported. We are excited about making YottaDB available to the large Python user community. Here is a “Hello, World” Python program that updates the database and illustrates YottaDB’s Unicode support:

import yottadb

if __name__ == "__main__":

    yottadb.set("^hello", ("Python",), value="नमस्ते दुनिया")

The Python wrapper can be installed with pip install yottadb. Full details of the API are in the Python wrapper user documentation. The current Debian Docker image at Docker Hub includes the Python wrapper. We thank Peter Goss (@gossrock) for his contributions to the Python wrapper.

Python joins C, Go, M, node.js, Perl, and Rust as languages with APIs to access YottaDB.

Owing to an internal change required to support the Python wrapper, application code written in Go and Rust will need to be compiled with new versions of the Go and Rust wrappers. We anticipate no regressions, and apologize for the inconvenience.

As discussed in our blog post Fuzz Testing YottaDB, adding a new type of testing exposed bugs previously neither encountered in our development environment nor reported by a user. Although fuzz testing generates syntactically correct but semantically questionable, contorted code that is unlikely to be part of any real application, the bugs are nevertheless defects. r1.34 includes the first tranche of fixes. As we are dedicating hardware to continuous fuzz testing, future YottaDB releases will include fixes for bugs found by fuzzing. We thank Zachary Minneker of Security Innovation for Fuzz Testing YottaDB and bringing its benefits to our attention.

In addition to fixes for issues, whether found by fuzz testing or otherwise, YottaDB r1.34 has enhancements that make it faster and more friendly, e.g.,

- Faster stringpool garbage collection, thanks to Alexander Sergeev and Konstantin Aristov (@littlecat).

- HOME and END keys work in direct mode for READ, thanks to Sergey Kamenev (@inetstar).

- Multiple improvements to ydbinstall / ydbinstall.sh.

- Enhancements to ydb_env_set to improve performance under some conditions and to be compatible with existing environments created without ydb_env_set.

- Enhancements to the %RSEL utility program.

YottaDB r1.34 also inherits enhancements and fixes from GT.M V6.3-011.

Details are in the release notes.

YottaDB r1.32 Released

Although there is no single theme to YottaDB r1.32, it qualifies as a major release because it includes a significant number of enhancements, including several to enable the Application Independent Metadata plugin. The plugin provides functionality for applications to push responsibility for maintaining cross references and statistics to YottaDB triggers, thereby reducing the code that applications must maintain. Enhancements include:

- The $ZYSUFFIX() function enables applications to create variable and routine names that are guaranteed for all practical purposes to be unique, as it uses the 128-bit MurmurHash3 non-cryptographic hash.

- A $ZPARSE() option to follow symbolic links.

- Sourcing ydb_env_set creates and manages a three-region database, and defaults to UTF-8 mode.

- An option to allow $ZINTERRUPT to be invoked for the USR2 signal.

- The --aim option of ydbinstall / ydbinstall.sh installs the Application Independent Metadata plugin.

- Shell-like word expansion with $VIEW("WORDEXP").

- Propagation downstream of context set by triggers.

- ydb_ci_*() functions return the ZHALT argument for C code that calls M code which terminates with a ZHALT.

From the upstream GT.M V6.3-009 and GT.M V6.3-010, which are included in the r1.32 code base, there are enhancements to operational functionality.

There are numerous smaller enhancements that make system administration and operations (DevOps) friendlier, and easier to automate. For example, %PEEKBYNAME() has an option to query global directory segments without opening the corresponding database files, orphaned relinkctl files are automatically cleaned up, and the --octo option of ydbinstall / ydbinstall.sh installs Octo such that octo --version reports the git commit hash of the build.